
From this point on, let us call any system capable of generating a conscious self an Ego Machine. An Ego Machine does not have to be a living thing; it can be anything that possesses a conscious self-model. It is certainly conceivable that someday we will be able to construct artificial agents. These will be self-sustaining systems. Their self-models might even allow them to use tools in an intelligent manner. If a monkey’s arm can be replaced by a robot arm and a monkey’s brain can learn to directly control a robot arm with the help of a brain-machine interface, it should also be possible to replace the entire monkey. Why should a robot not be able to experience the rubber-hand illusion? Or have a lucid dream? If the system has a body model, full-body illusions and out-of-body experiences are clearly also possible.

In thinking about artificial intelligence and artificial consciousness, many people assume there are only two kinds of information-processing systems: artificial ones and natural ones. This is false. In philosophers’ jargon, the conceptual distinction between natural and artificial systems is neither exhaustive nor exclusive: that is, there could be intelligent and/or conscious systems that belong in neither category. With regard to another old-fashioned distinction, software versus hardware, we already have systems using biological hardware that can be controlled by artificial (that is, man-made) software, and we have artificial hardware that runs naturally evolved software.

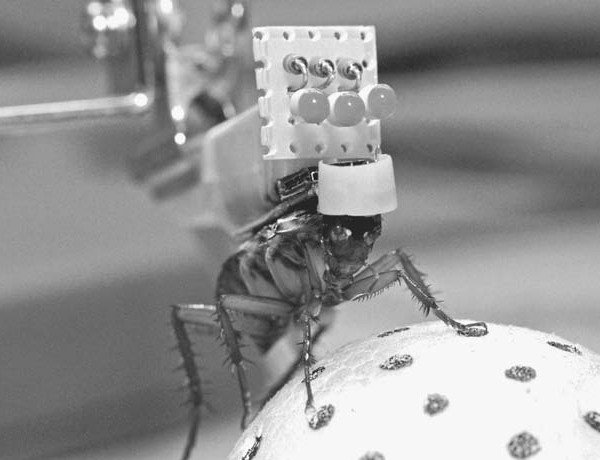

Figure 16: RoboRoach. Controlling the movements of cockroaches with surgically implanted microrobotic backpacks. The roach’s “backpack” contains a receiver that converts the signals from a remote control into electrical stimuli that are applied to the base of the roach’s antennae. This allows the operator to get the roach to stop, go forward, back up, or turn left and right on command.

Hybrid biorobots are an example of the first category. Hybrid biorobotics is a new discipline that uses naturally evolved hardware and does not bother with trying to re-create something that has already been optimized by nature over millions of years. As we reach the limitations of artificial computer chips, we may increasingly use organic, genetically engineered hardware for the robots and artificial agents we construct.

An example of the second category is the use of software patterned on neural nets to run in artificial hardware. Some of these attempts are even using the neural nets themselves; for instance, cyberneticists at the University of Reading (U.K.) are controlling a robot by means of a network of some three hundred thousand rat neurons.1 Other examples are classic artificial neural networks for language acquisition or those used by consciousness researchers such as Axel Cleeremans at the Cognitive Science Research Unit at Université Libre de Bruxelles in Belgium to model the metarepresentational structure of consciousness and what he calls its “computational correlates.”2 The latter two are biomorphic and only semiartificial information-processing systems, because their basic functional architecture is stolen from nature and uses processing patterns that developed in the course of biological evolution. They create “higher-order” states; however, these are entirely subpersonal.

We may soon have a functionalist theory of consciousness, but this doesn’t mean we will also be able to implement the functions this theory describes on a nonbiological carrier system. Artificial consciousness is not so much a theoretical problem in philosophy of mind as a technological challenge; the devil is in the details. The real problem lies in developing a non-neural kind of hardware with the right causal powers: Even a simplistic, minimal form of “synthetic phenomenology” may be hard to achieve — and for purely technical reasons.

The first self-modeling machines have already appeared. Researchers in the field of artificial life began simulating the evolutionary process long ago, but now we have the academic discipline of “evolutionary robotics.” Josh Bongard, of the Department of Computer Science at the University of Vermont, and his colleagues Victor Zykov and Hod Lipson have created an artificial starfish that gradually develops an explicit internal self-model.3 Their four-legged machine uses actuation-sensation relationships to infer indirectly its own structure and then uses this self-model to generate forward locomotion. When part of its leg is removed, the machine adapts its self-model and generates alternative gaits: it learns to limp. Unlike the phantom-limb patients discussed in chapter 4, it can restructure its body representation following the loss of a limb; thus, in a sense, it can learn. As its creators put it, it can “autonomously recover its own topology with little prior knowledge,” by constantly optimizing the parameters of its resulting self-model. The starfish not only synthesizes an internal self-model but also uses it to generate intelligent behavior.
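The loop described above (probe the body, infer its structure from actuation-sensation pairs, re-fit after damage, adapt the gait) can be illustrated with a toy sketch in Python. This is not the method of Bongard, Zykov, and Lipson. The `Robot` class, the single length parameter per leg, the assumed relation displacement = length × sin(angle), and all function names are hypothetical and chosen only to make the idea concrete.

```python
import math


class Robot:
    """Hypothetical stand-in for the physical machine.

    The true leg lengths are hidden; the learner sees only sensor readings.
    """

    def __init__(self, leg_lengths):
        self._legs = list(leg_lengths)

    def actuate(self, leg, angle):
        """Swing one leg by `angle` radians; return the sensed foot displacement.

        Assumed toy physics: displacement = leg length * sin(angle).
        """
        return self._legs[leg] * math.sin(angle)

    def damage(self, leg, fraction_lost):
        """Remove a fraction of one leg (the learner is not told)."""
        self._legs[leg] *= 1.0 - fraction_lost


def infer_self_model(robot, n_legs, probe_angles):
    """Estimate each leg's length from actuation-sensation pairs.

    Least-squares fit of d = L * sin(a) for one unknown L per leg.
    """
    model = []
    for leg in range(n_legs):
        num = 0.0
        den = 0.0
        for a in probe_angles:
            d = robot.actuate(leg, a)
            num += d * math.sin(a)
            den += math.sin(a) ** 2
        model.append(num / den)
    return model


def plan_gait(model, stride):
    """Pick a swing angle per leg so that every leg covers the same stride."""
    return [math.asin(min(1.0, stride / length)) for length in model]


probes = [0.1, 0.2, 0.3]
robot = Robot([1.0, 1.0, 1.0, 1.0])

model_before = infer_self_model(robot, 4, probes)

robot.damage(leg=2, fraction_lost=0.5)             # lose half of one leg
model_after = infer_self_model(robot, 4, probes)   # re-fit the self-model
gait = plan_gait(model_after, stride=0.25)         # compensate: "learn to limp"

print(model_before, model_after, gait)
```

In this sketch the shortened leg ends up with a larger swing angle than the intact ones, which is a crude analogue of the limping gait. A real system would have to infer far more than one number per leg, and would do so from noisy sensors.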

Self-models can be unconscious, they can evolve, and they can be created in machines that mimic the process of biological evolution. In sum, we already have systems that are neither exclusively natural nor exclusively artificial. Let us call such systems postbiotic. The likely possibility is that conscious selfhood will first be realized in postbiotic Ego Machines.

Figure 17a: Starfish, a four-legged robot that walks by using an internal self-model it has developed and which it continuously improves. If it loses a limb, it can adapt its internal self-model.5
